Why "Product Logic" Fails in Marketing Measurement

Deterministic dreams vs. Probabilistic reality: Why applying "Product Logic" to marketing measurement is a dangerous trap.

Yesterday, I sat in a room at the Amplitude Marketing Summit, surrounded by a group of amazing Marketing Analytics Specialists. Amplitude (the giant of Product Analytics) was pitching their vision for the future of marketing measurement inside their ecosystem.

First of all, I want to applaud Amplitude. Not just because it was an amazing event and a lot of fun, but also because I think it’s very smart to invite a bunch of opinionated people from an industry you want to serve and let them rant about your product. More companies should do this to get valuable feedback and insights, IMO.

The promise was seductive: “Unify your product and marketing data to see the full journey, and act on it immediately.”

The implication was clear: If we just track the user cleanly enough, we can calculate a precise ROAS (Return on Ad Spend) for every single user, down to the penny, and optimize accordingly (with a load of AI on top, obviously).

But as I watched the roadmap unfold, I realized there is a fundamental friction here. It isn’t just a tooling gap; it’s a worldview gap.

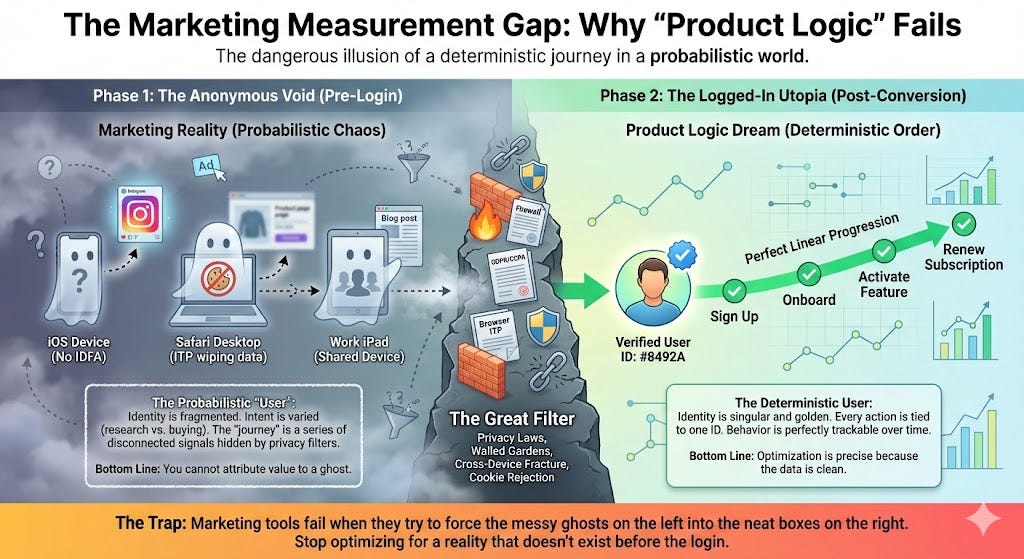

Product Logic assumes that you can understand and optimize the world by stitching together user-level events into a clean, deterministic story. Marketing Reality is that the “Real User ID” does not exist; it has been severed by privacy laws, walled gardens, and messy human behavior.

If we try to force Marketing into a Product mold, we risk optimizing for a reality that no longer exists. (Or maybe, never even existed…)

The “Swiss Army Knife” Fallacy

The core tension starts with the idea that one measurement model works for everything.

In the Product world, the “User” is the center of the universe. This works beautifully for B2B SaaS or Product-Led Growth (PLG). If you are Slack or Dropbox, the marketing journey is the product journey. A user signs up, logs in, and interacts. The data is deterministic. The ID is golden.

But if you are a D2C Ecommerce brand, a publisher, or an Enterprise with an 18-month sales cycle, that logic falls apart.

Take the concept of the “User Journey.”

Product Logic tries to flatten everything into a single timeline per user.

But right now, I have three tabs open on Amazon for three completely different products. I am one “User,” but I am exhibiting three distinct Intent Clusters.

Tab 1: Buying diapers (High urgency, low research).

Tab 2: Looking at extensive reviews for a camera (Low urgency, high research).

Tab 3: A book I clicked on by accident.

If you flatten this complexity into a single “User LTV” or “Session Value,” you erase the context of why I am there.

Product Logic treats the “User” as the atomic unit of analysis, but in marketing, the atomic unit is often the Intent or the Context, not the person.

The Myth of the Pre-Login “User”

This conceptual mismatch (Intent vs. User) is already a massive hurdle. But the real crisis happens when you realize that even if you want to track that User, you physically can’t.

Product Logic relies on a clean, deterministic chain: User sees ad -> User clicks -> User converts.

This works in a logged-in state. But in the early stages of the customer journey (the “Anonymous Void” where marketing actually happens), this chain is broken. Depending on the business model, this could apply to the largest part of your traffic.

If you think in terms of “User Journeys” here, you are optimizing for a ghost.

1. The Technical Severing (Cross-Device & Privacy)

The “User” is actually just a browser cookie or mobile identifier. And it’s not getting any more accurate or useful…

Cross-Device Fracture: A user sees an ad on Instagram (Mobile In-App Browser), gets interrupted, and buys later on Safari (Desktop). You see a “Bounce” on mobile and a “Direct” conversion on desktop as two unrelated events. The “Journey” is a lie.

Browser Hostility: Apple’s ITP (Intelligent Tracking Prevention) caps cookie lifespans; cookies set via JavaScript, for example, expire at most seven days after they are written. If a user clicks an ad today but buys in 8 days, the tie is gone. The journey is erased. (And, IMO, Apple is not done playing this game.)
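To make the 8-day example concrete, here is a minimal Python sketch that checks whether a conversion can still be tied to the original ad click. It assumes the click ID lives in a script-set first-party cookie capped at 7 days, and that the user does not return in between, because a return visit could re-set the cookie. `COOKIE_CAP_DAYS` and `is_still_attributable` are hypothetical names for illustration. They are not part of any browser API.

```python
from datetime import datetime, timedelta

# Assumption: the click ID sits in a script-set first-party cookie that ITP
# caps at 7 days, and the user does not return in between (a return visit
# could re-set the cookie and restart the clock). Illustrative only.
COOKIE_CAP_DAYS = 7


def is_still_attributable(click_time: datetime, conversion_time: datetime,
                          cap_days: int = COOKIE_CAP_DAYS) -> bool:
    """True if the cookie written at click time would still exist at conversion."""
    return conversion_time - click_time <= timedelta(days=cap_days)


click = datetime(2024, 10, 1, 12, 0)
print(is_still_attributable(click, click + timedelta(days=3)))  # True
print(is_still_attributable(click, click + timedelta(days=8)))  # False: the tie is gone
```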

2. The Legal Severing (Consent)

In Europe (GDPR) and increasingly in the US (CCPA), the journey doesn’t start when the user visits; it starts when the user consents.

If 30% of your traffic rejects tracking, your “User Journey” map has 30% holes in it. You aren’t seeing a complete picture; you are seeing a survivor bias of people who like clicking “Accept.”
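Survivor bias matters because the common fix, scaling consented numbers up by the consent rate, quietly assumes that people who click “Reject” behave like people who click “Accept.” The sketch below uses entirely invented conversion rates to show how that assumption can mislead. The bias could just as easily run in the other direction.

```python
# Hypothetical illustration: all numbers are invented for the example.

def naive_scaled_conversions(observed_conversions: float, consent_rate: float) -> float:
    """Scale consented conversions up to 100% of traffic, assuming the people
    who rejected tracking behave exactly like those who accepted."""
    return observed_conversions / consent_rate


def true_conversions(visitors: int, consent_rate: float,
                     cr_consenters: float, cr_rejecters: float) -> float:
    """Ground truth when consenters and rejecters convert at different rates."""
    consented = visitors * consent_rate
    rejected = visitors * (1 - consent_rate)
    return consented * cr_consenters + rejected * cr_rejecters


visitors = 10_000
consent_rate = 0.70   # 30% reject tracking
cr_consenters = 0.03  # hypothetical conversion rate of people who accept
cr_rejecters = 0.05   # hypothetical conversion rate of people who reject

observed = visitors * consent_rate * cr_consenters           # what analytics sees
estimate = naive_scaled_conversions(observed, consent_rate)  # naive "corrected" number
actual = true_conversions(visitors, consent_rate, cr_consenters, cr_rejecters)
print(round(observed), round(estimate), round(actual))  # 210 300 360
```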

This combination of technical fragmentation and legal gating means that for the vast majority of the marketing funnel, the “User” is a flawed concept. You cannot stitch what you cannot see, and you cannot attribute value to a ghost.

The “Statistical Shield”: Why Complexity Won’t Save You

When marketers realize the “User ID” is broken (thanks to Apple’s ITP, tracking prevention, and cross-device fractures), panic sets in.

The common reaction is to run toward complexity. We start hearing buzzwords like “Probabilistic Modeling,” “Advanced MMM,” and “Triangulation.”

I’m very interested in these approaches, try to read as much as I can about them, and always invest time and money when my customers want to explore them. But I’m increasingly skeptical about their real-world applicability, especially for my segment of customers (large small businesses or small enterprises).

In my opinion, this is often just using statistical models as a shield. The target of my critique isn’t the math itself; it’s the misuse of these models as an unquestionable authority.

It feels safe to say, “Our proprietary Bayesian model attributes this to Facebook,” because it sounds scientific. But often, these models are just black boxes that validate our existing biases.

We feed them messy data, apply complex math, and treat the output as gospel.

Complexity is not a strategy. If you can’t explain why a channel is working without pointing to a black-box algorithm, you aren’t measuring; you’re guessing with confidence.

If we can’t trust the simple tracking, and we shouldn’t hide behind complex models, what is left?

There is no silver bullet. In my opinion, you should stop trying to find the perfect tool and start building a stack that respects and surfaces the messiness.

Here is where I would focus:

0. Acknowledge, Accept & Educate on the Complexity

First and foremost, before you write a single line of code or sign a contract for a new tool, you need to address the human element.

The single biggest reason marketing measurement projects fail is not technical. It’s cultural. It is the mismatch between the C-Suite’s desire for absolute certainty and the messy reality of the ecosystem.

Acknowledge the Goal: You must instill a basic understanding across the organization (especially with Finance and Leadership) that the goal of Marketing Analytics is not “Accounting” (perfectly tracking every penny). The goal is “Navigation” (knowing which direction to steer the ship) and “Making Better Decisions, Faster.”

Accept the Imperfection: You will never get perfect data. It does not exist. You need to explicitly define, document, and accept the tradeoffs you’re making, and onboard everybody onto them, so you stop rehashing the same discussions over and over again.

Educate Continuously: This is not a “set and forget” project. The landscape changes every time Apple releases an iOS update or a regulator passes a new law. You must commit to an ongoing process of educating your stakeholders on why the numbers look the way they do.

If you don’t set these expectations upfront, every technical solution you build will be viewed with suspicion when it inevitably fails to match the bank account perfectly.

1. Build the Best Possible Identity Graph

Now, this might feel contradictory to my earlier point that you can’t cram marketing analytics into the deterministic product analytics setup. The key here is the “Best Possible” Identity Graph: we accept that it is imperfect, that it is not deterministic, and that it is not fixed in time.

You can’t measure what you can’t hold onto. You need to make your grasp on user identity across anonymous or unknown browsers as strong as possible, so you can connect as many touchpoints as possible and improve your understanding.

The Goal: Stitch the session as aggressively as you can (within legal limits).

The Tactic: Don’t just rely on cookies. Use fallback logic. Look at hashing emails on entry (e.g., newsletter signups) to link that browser to a backend ID. Depending on legal options and customer types, evaluate IP lookup and fingerprinting.
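The “hash emails on entry” tactic can be sketched as follows. The email is normalized, hashed with SHA-256, and the hash is used as a stable backend key that the current browser ID points to. The in-memory `browser_to_backend` dict and the `on_newsletter_signup` hook are hypothetical stand-ins for your own storage and signup flow. Note that under GDPR a hashed email is still pseudonymous personal data, so the legal check above still applies.

```python
import hashlib

# Hypothetical in-memory store: anonymous browser ID -> hashed-email backend key.
browser_to_backend: dict[str, str] = {}


def hash_email(email: str) -> str:
    """Normalize an email address (trim, lowercase) and return its SHA-256 hex digest.

    Real pipelines may need stricter normalization; this is a minimal version.
    """
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def on_newsletter_signup(browser_id: str, email: str) -> str:
    """Hypothetical signup hook: link this browser to the person behind the email."""
    key = hash_email(email)
    browser_to_backend[browser_id] = key
    return key


k1 = on_newsletter_signup("cookie-abc", " Jane@Example.com ")
k2 = on_newsletter_signup("cookie-xyz", "jane@example.com")
print(k1 == k2)  # True: both browsers now point to the same backend key
```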

This is essential, and one key element is that the identity graph should be continuously enriched and retroactively updated with new knowledge (e.g., new devices or browsers that belong to the same user).
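One simple way to get the retroactive behavior is a union-find (disjoint-set) structure over identifiers. This is my choice of technique for illustration, not something a specific tool ships. When a new link is discovered, two clusters merge, and everything already recorded under either identifier now resolves to the same person. The `IdentityGraph` class and the ID strings are hypothetical. A production graph would also need confidence scores on links and a way to undo bad merges.

```python
class IdentityGraph:
    """Minimal union-find over identifiers (cookies, device IDs, hashed emails).

    Linking two identifiers merges their clusters, so everything already
    recorded under an old cookie is retroactively tied to the same person
    once a new link is discovered.
    """

    def __init__(self) -> None:
        self._parent: dict[str, str] = {}

    def person_of(self, node: str) -> str:
        """Return the representative ID of the cluster that `node` belongs to."""
        self._parent.setdefault(node, node)
        root = node
        while self._parent[root] != root:
            root = self._parent[root]
        # Path compression: point every node on the path directly at the root.
        while node != root:
            next_node = self._parent[node]
            self._parent[node] = root
            node = next_node
        return root

    def link(self, a: str, b: str) -> None:
        """Record evidence that identifiers `a` and `b` belong to the same person."""
        root_a, root_b = self.person_of(a), self.person_of(b)
        if root_a != root_b:
            self._parent[root_b] = root_a

    def same_person(self, a: str, b: str) -> bool:
        return self.person_of(a) == self.person_of(b)


graph = IdentityGraph()
graph.link("cookie:mobile-123", "email:3f2a")   # hypothetical mobile newsletter signup
print(graph.same_person("cookie:mobile-123", "cookie:desktop-456"))  # False so far
graph.link("cookie:desktop-456", "email:3f2a")  # later desktop login with same email
print(graph.same_person("cookie:mobile-123", "cookie:desktop-456"))  # True, retroactively
```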

Doing this well often requires multiple things to work together. It is very unlikely that a tool can generate a useful User Identity Graph out of the box. (CDPs tried…) The main unlock is likely something unique to your business.

(Example: a former US client had great success identifying their B2B users’ mobile devices by sending certain app-activation emails at times when customers were more likely to open them on mobile rather than desktop (early and late in the workday). The redirect from the email link to the app identified their mobile browser and thus stitched their previous mobile journey to the rest of their desktop journeys.)

2. Segregate Your Landing Pages

We rely too much on advertising parameters that get stripped out by privacy filters. (And I’m fearful that in the future it’s not just click_ids that will get stripped by browsers.)

The Goal: Make the traffic source undeniable, even if the tracking pixel fires blank, by falling back on the landing page for source/campaign attribution.

The Tactic: Create specific landing pages for specific campaigns per source.

If you are running a specific Facebook campaign, land them on “/f-offer-a” and send the Google traffic to “/g-offer-a”. Work with your SEO team to make sure these pages are not indexed and are not flagged as duplicate content.

Reassign all traffic that lands on these pages as “Direct” (or from other unlikely sources) to the intended source of the ad traffic aimed at that page.
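The fallback rule can be sketched as a small resolver. Tracked parameters are kept when present. When the recorded source is empty or implausible for a non-indexed campaign page, source and campaign come from the landing path instead. The path-to-campaign mapping and the set of “unlikely” source labels are hypothetical and should be replaced with your own taxonomy.

```python
from typing import Optional, Tuple

# Hypothetical mapping from dedicated landing-page paths to (source, campaign).
LANDING_PAGE_SOURCES = {
    "/f-offer-a": ("facebook", "offer-a"),
    "/g-offer-a": ("google", "offer-a"),
}

# Source labels treated as "unlikely" for these non-indexed pages; adjust to your taxonomy.
UNLIKELY_SOURCES = {"direct", "(direct)", "(none)", "unknown", ""}


def resolve_source(landing_path: str, reported_source: Optional[str],
                   reported_campaign: Optional[str]) -> Tuple[str, Optional[str]]:
    """Keep tracked parameters when present; fall back to the landing page otherwise."""
    source = (reported_source or "").strip().lower()
    path = landing_path.rstrip("/") or "/"
    if source in UNLIKELY_SOURCES and path in LANDING_PAGE_SOURCES:
        return LANDING_PAGE_SOURCES[path]
    return (source or "direct", reported_campaign)


print(resolve_source("/f-offer-a", "Direct", None))      # ('facebook', 'offer-a')
print(resolve_source("/g-offer-a", "google", "brand"))   # ('google', 'brand')
```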

You will get your ad spend out of the ad platforms in an aggregated format, most likely something like spend per campaign per day. It’s essential that you ‘smear it out’ as accurately as possible across all incoming traffic from that source/campaign for that day, so as not to further degrade any downstream logic.
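A minimal version of the “smear it out” step follows. Each (date, campaign) spend row is split evenly across that day’s sessions attributed to the campaign. Spend with no matching sessions is returned separately instead of being silently dropped. The row and session shapes are assumptions for illustration. An even split is the simplest option, and weighting by clicks or another signal may be more accurate for your data.

```python
from collections import defaultdict


def allocate_daily_spend(spend_rows, sessions):
    """Spread aggregated (date, campaign) spend evenly across that day's sessions.

    spend_rows: iterable of (date, campaign, spend) from the ad platform export.
    sessions:   iterable of dicts with "session_id", "date", "campaign".
    Returns (allocated, unallocated): {session_id: cost} and {(date, campaign): spend}.
    """
    sessions_by_key = defaultdict(list)
    for s in sessions:
        sessions_by_key[(s["date"], s["campaign"])].append(s["session_id"])

    allocated, unallocated = {}, {}
    for date, campaign, spend in spend_rows:
        ids = sessions_by_key.get((date, campaign), [])
        if not ids:
            unallocated[(date, campaign)] = spend  # keep it visible, don't drop it
            continue
        per_session = spend / len(ids)
        for sid in ids:
            allocated[sid] = allocated.get(sid, 0.0) + per_session
    return allocated, unallocated
```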

3. HDYHAU (How Did You Hear About Us?)

Digital data misses the “Dark Social” world (podcasts, word of mouth, Slack communities).

The Goal: Capture the “Zero-Party” data.

The Tactic: Ask them. Implement a post-purchase survey.

The Rule: When digital data is generic (Direct/Unknown), treat HDYHAU as the primary source of truth. If your analytics says “Direct Traffic” but the customer says “The All-In Podcast,” believe the customer.

The Nuance: Human memory isn’t perfect (recall bias is real). But when digital data gives you nothing, a human guess is infinitely better. When they conflict on specifics, don’t just blindly overwrite; capture both signals to understand the difference between capture (the click) and influence (the memory).
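The rule and the nuance above can be combined in one resolver. The survey answer wins only when the digital source is generic. Both raw signals are always stored, so capture and influence can be compared later. The `SourceSignals` record, the `survey:` prefix, and the list of generic labels are hypothetical design choices, not a standard.

```python
from dataclasses import dataclass
from typing import Optional

# Labels treated as "no real information" from the analytics stack (adjust to yours).
GENERIC_DIGITAL_SOURCES = {"direct", "unknown", "(none)", ""}


@dataclass
class SourceSignals:
    digital_source: Optional[str]  # what analytics recorded (the "capture")
    survey_answer: Optional[str]   # the HDYHAU response (the "influence")
    resolved_source: str           # what we report on


def resolve_with_hdyhau(digital_source: Optional[str],
                        survey_answer: Optional[str]) -> SourceSignals:
    """Prefer the survey only when digital data is generic; always keep both signals."""
    digital = (digital_source or "").strip().lower()
    survey = (survey_answer or "").strip()
    if digital in GENERIC_DIGITAL_SOURCES and survey:
        resolved = f"survey:{survey}"
    elif digital:
        resolved = digital
    else:
        resolved = "unknown"
    return SourceSignals(digital_source, survey_answer, resolved)


print(resolve_with_hdyhau("Direct", "The All-In Podcast").resolved_source)
```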

4. Spend Time vs. Conversion Time

Most tools report on Conversion Time. (e.g., “We made 10k today”).

But if you spent the money to acquire those users 30 days ago, you are mismatching your financials.

The Goal: Financial coherence.

The Tactic: Attribute revenue back to the Click Time (or Spend Time). You need to know if the money you spent in September actually generated a return, even if that return happened in October. If you don’t do this, you will cut budget in months that look expensive but are actually just “maturing.”
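The difference between the two views is easiest to see with a toy example. The same conversions are grouped by the month of the click and by the month of the conversion, and each is compared against that month’s spend. All conversions and spend figures below are hypothetical.

```python
from collections import defaultdict
from datetime import date


def revenue_by_month(conversions, key):
    """Sum revenue per (year, month) of the chosen date field ("click_date" or "conversion_date")."""
    totals = defaultdict(float)
    for c in conversions:
        d = c[key]
        totals[(d.year, d.month)] += c["revenue"]
    return dict(totals)


# Hypothetical conversions: clicks bought in September, revenue arriving in October.
conversions = [
    {"click_date": date(2024, 9, 20), "conversion_date": date(2024, 10, 5), "revenue": 400.0},
    {"click_date": date(2024, 9, 28), "conversion_date": date(2024, 10, 12), "revenue": 600.0},
    {"click_date": date(2024, 10, 2), "conversion_date": date(2024, 10, 3), "revenue": 100.0},
]
spend = {(2024, 9): 500.0, (2024, 10): 300.0}  # hypothetical spend per month

for label, key in [("conversion time", "conversion_date"), ("click time", "click_date")]:
    rev = revenue_by_month(conversions, key)
    roas = {month: rev.get(month, 0.0) / s for month, s in spend.items()}
    print(label, roas)
```

Reported by conversion time, September shows a ROAS of 0.0 and looks like a month to cut. Reported by click time, the same September spend returns 2.0.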

This is another big shift away from how product analytics suites typically report.

5. Marginal ROAS

Stop optimizing for Average ROAS.

The Goal: Understand the value of the next dollar.

The Reality: Your dashboard shows you a blended average. It says your ROAS is 4.0. But your Marginal ROAS (the return on the extra 100 you just spent) might be 0.5.

The Tactic: You have to swing the budget. Push spend until efficiency drops, then pull back. You can’t model this from a static report; you have to experiment with the spend levels to find the point of diminishing returns.
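The arithmetic behind the 4.0 vs. 0.5 example is a ratio of differences rather than a ratio of totals. The sketch below uses hypothetical before/after numbers from a single budget swing. It also assumes that everything else stayed constant between the two spend levels, which real tests only approximate.

```python
def average_roas(spend: float, revenue: float) -> float:
    """Blended ROAS: total revenue over total spend."""
    return revenue / spend


def marginal_roas(spend_before: float, revenue_before: float,
                  spend_after: float, revenue_after: float) -> float:
    """Return on the *extra* spend between two budget levels."""
    extra_spend = spend_after - spend_before
    if extra_spend == 0:
        raise ValueError("Spend levels must differ to measure a marginal return.")
    return (revenue_after - revenue_before) / extra_spend


# Hypothetical budget swing: spend raised from 1,000 to 1,100.
before = (1000.0, 4000.0)  # blended ROAS 4.0
after = (1100.0, 4050.0)   # revenue only rose by 50
print(average_roas(*before))           # 4.0
print(marginal_roas(*before, *after))  # 0.5 on the extra 100
```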


6. Try to Make First-Touch Attribution Work

If you can get your user-stitching (Point #1) to work, see if you can get First-Touch Attribution to an acceptable level.

The Goal: Intellectual honesty about growth.

The Reality: Most standard attribution models (Last Click, Data-Driven) are biased toward channels that “harvest” demand (like Retargeting or Brand Search).

The Tactic: If you have a solid identity graph, First-Touch is the most intellectually honest answer to the question “What marketing tactic is actually bringing new people into our world?” It ignores the noise of the closing channels and focuses entirely on the acquisition signal.

This is hard to do, and I’ve already encountered customers where it’s unlikely to work (e.g., the quality of the user identity graph is not good enough to switch to first touch). But I do think it’s possible for most companies and business models.
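Given stitched journeys, first-touch and last-touch differ only in which end of each journey gets the credit. That is why the quality of the identity graph decides whether first touch is trustworthy. The journeys below are hypothetical, and `attribute` is an illustrative helper, not a vendor API.

```python
from collections import Counter


def attribute(journeys, model: str) -> Counter:
    """Give each converting journey one unit of credit, at its first or last touch.

    journeys: one list of channel names per converting person, in chronological
              order, after identity stitching has merged their devices/browsers.
    """
    if model not in ("first_touch", "last_touch"):
        raise ValueError(f"unknown model: {model}")
    credit = Counter()
    for touches in journeys:
        if not touches:
            continue  # fully untracked journey: nothing to credit
        channel = touches[0] if model == "first_touch" else touches[-1]
        credit[channel] += 1
    return credit


# Hypothetical stitched journeys.
journeys = [
    ["podcast_ad", "brand_search"],
    ["instagram", "retargeting", "brand_search"],
    ["instagram", "email"],
    ["brand_search"],
]
print(attribute(journeys, "first_touch"))
print(attribute(journeys, "last_touch"))
```

Here last touch hands three of four conversions to brand search. First touch credits instagram with two, and podcast_ad and brand_search with one each.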

“Don’t you believe in MMM & Incrementality?!”

Reading the above, you might think I’m some kind of Luddite who rejects modern measurement science. That’s not the case.

I’m not qualified to explain in depth whether or how these models work. I think they do work in certain cases. But I’ve not been exposed to many real-world successes (and I’ve tried, and continue to try!)

My issue is that they are often sold as a default solution for everyone. Recently (over the last ~2 years), that pitch seems to have resurfaced, but the underlying fundamentals don’t seem to have changed…

As far as I know, MMM is useful if you have a lot of offline spend. It helps you align macro budgets. But for a digital-native brand spending $50k/month on Meta and Google? In most cases, with limited spend and little variation in that spend, an MMM will not give you any actionable insights.

Incrementality Testing (Geo-Lift) is arguably the gold standard for “Truth.” But it requires a specific environment to work well.

Geography Matters: Most of our customers operate mainly in the EU, and that’s a challenge for a clean geo holdout. In the US, you have clear Designated Market Areas (DMAs) that allow for clean test vs. control (holdout) comparisons. In Europe, markets are fragmented by language and borders, making clean geo-testing significantly harder and more expensive.

Scale Matters: To get statistical significance on a holdout test, you need volume. If you are small, the “noise” of normal sales variance will drown out the “signal” of your ad test.
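A rough way to see “noise drowns the signal” is the minimum detectable lift of a two-group test. The sketch uses the textbook normal approximation for a difference in means, with equal group sizes and independent observations. Real geo tests have correlated, messier data, so treat the output as optimistic. All inputs in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_lift(baseline_mean: float, std_dev: float, n_per_group: int,
                            alpha: float = 0.05, power: float = 0.8) -> float:
    """Smallest relative lift a two-group test can reliably detect.

    Normal approximation for a difference in means with equal group sizes and
    equal variance: (z_{1-alpha/2} + z_{power}) * sigma * sqrt(2 / n), divided by
    the baseline mean to express it as a relative lift.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    absolute_mde = (z_alpha + z_power) * std_dev * sqrt(2 / n_per_group)
    return absolute_mde / baseline_mean


# Hypothetical: 28 days per group; noisy small brand vs. steadier large brand.
print(f"small brand: {minimum_detectable_lift(1_000, 400, 28):.1%}")
print(f"large brand: {minimum_detectable_lift(100_000, 10_000, 28):.1%}")
```

With these assumed inputs, the small brand can only detect a lift of roughly 30%, while the steadier large brand can detect roughly 7.5%.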

So yes, use them if you have the scale and the offline mix that demands them. But don’t use them as a bypass to avoid doing the hard hygiene work listed above.

What about Triangulation? (MTA + MMM + Incrementality)

While triangulation is academically ideal, I really don’t see any of my customers being able to do it successfully anytime soon. Some problems:

The Ownership Silo: Marketing owns MTA (optimizing for growth) while Finance often owns MMM (optimizing for efficiency). When the models inevitably disagree, it triggers political turf wars over budget rather than a unified search for truth.

The Velocity Mismatch: You are attempting to merge real-time click data with backward-looking quarterly modeling. By the time you reconcile the two, market conditions have shifted, rendering the insight obsolete.

The Calibration Void: Most teams lack the advanced Bayesian frameworks required to mathematically weight conflicting signals. Instead of clarity, they get three contradictory dashboards and total decision paralysis.
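For a sense of what “mathematically weighting conflicting signals” means at its very simplest, here is inverse-variance weighting. This is a much simpler technique than the Bayesian calibration frameworks mentioned above, swapped in purely for illustration. It only works if each estimate is unbiased and comes with an honest standard error, and MTA, MMM, and lift tests rarely guarantee that. The ROAS values and errors are hypothetical.

```python
from math import sqrt


def inverse_variance_combine(estimates):
    """Combine independent estimates of the same quantity, weighting by precision.

    estimates: list of (point_estimate, standard_error) pairs.
    Returns (combined_estimate, combined_standard_error).
    Assumes each estimate is unbiased and errors are independent.
    """
    weights = [1 / se ** 2 for _, se in estimates]
    total = sum(weights)
    combined = sum(w * est for w, (est, _) in zip(weights, estimates)) / total
    return combined, sqrt(1 / total)


# Hypothetical ROAS estimates for one channel from three methods.
signals = [
    (3.2, 0.4),  # MTA
    (1.8, 0.9),  # MMM
    (2.1, 0.6),  # geo-lift test
]
roas, se = inverse_variance_combine(signals)
print(f"combined ROAS {roas:.2f} +/- {se:.2f}")
```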

It’s very hard to do one of these well, let alone three. And even if you do all three well, it’s very hard to use them to fuel your decisions… (I obviously don’t work with Uber, Airbnb, or Booking, so maybe there’s a scale where this does work?)

“Big Data MMM + MTA + Incrementality Triangulation is like Teenage Sex: everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it...” (adapted from Dan Ariely’s quip about Big Data)

The Uncomfortable Conclusion

It’s not simple.

If it were simple, someone would have fixed it already.

It will likely never be simple. The ecosystem is too fragmented, the privacy laws are too strict, and human behavior is too chaotic.

It is unlikely that one single tool, whether it’s Amplitude, Google Analytics, or a specialized attribution vendor, will get you there. A lot lies in the nuance of your specific business model.

Are you B2B SaaS or D2C Ecom?

Is your sales cycle 5 minutes or 5 months?

Do you rely on Google Search or Influencers?

This brings us back to the Amplitude vision. Amplitude is right to chase this. The separation between “Acquisition Data” and “Retention Data” is artificial, and bridging that gap is the next frontier. It also makes sense from the business point of view to have these things in the same tool.

But the danger lies in overselling determinism in a probabilistic world.

All those amazing “Product Analytics” features (A/B testing, CRO, Personalization) rely on that one, golden, deterministic User ID.

If we pretend that the ID is as solid in the Ad Impression stage as it is in the Logged-In stage, we build our house on sand. You risk personalizing an experience for a “user” that is actually three different people on a shared iPad, or optimizing a funnel based on a journey that never happened.

The Goal is to Do Better

The good news is that the goal is not perfection; the goal is to make better decisions tomorrow than you made yesterday… At least that’s what I tell myself when I overcomplicate things and get overwhelmed by dead ends 😁.

Stop searching for a single source of truth; build a stack of partial truths that you understand.

Sidenote: A lot of my recent thinking on attribution and marketing measurement is influenced by Constantine Yurevich of SegmentStream. He’s a bit controversial (on purpose) but I think he’s right about the non-applicability of a lot of complex statistics to marketing optimization efforts. I can highly recommend his course to challenge your thinking on this topic.